Authors

Raymond R. Panko

Abstract

In the spreadsheet error community, both academics and practitioners have generally ignored the rich findings produced by a century of human error research.

These findings can suggest ways to reduce errors; we can then test these suggestions empirically.

In addition, research on human error seems to suggest that several common prescriptions and expectations for reducing errors are likely to be incorrect.

Among the key conclusions from human error research are that thinking is bad, that spreadsheets are not the cause of spreadsheet errors, and that reducing errors is extremely difficult.

Sample

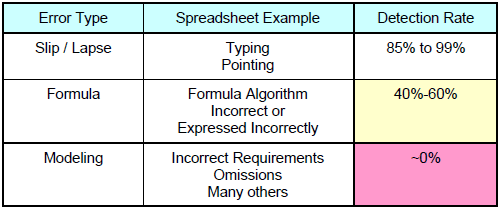

Error detection rates are different for the different types of errors: slip/lapse, formula, and modeling.

This research leads to three fundamental conclusions:

- The problem is not spreadsheets. The real issue is that thinking is bad. We are accurate 96% to 98% of the time when we do think. But when we build spreadsheets with thousands (or even dozens) of root formulas, the issue is not whether there is an error but how many errors there are and how serious they are.

- Making large spreadsheets error free is theoretically and practically impossible, and even reducing errors by 80% to 90% is extremely difficult and will require spending about 30% of project time on testing and inspection.

- Replacing spreadsheets with packages does not eliminate errors and may not even reduce them.
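
The arithmetic behind the first conclusion can be illustrated with a rough sketch. It assumes, purely for illustration, that the 96% to 98% accuracy figure applies to each root formula and that errors in different formulas are independent; neither assumption is stated in the paper, and the function names are hypothetical.

```python
def p_at_least_one_error(accuracy: float, n_formulas: int) -> float:
    """Probability that at least one of n_formulas is wrong.

    Assumption (not from the paper): each formula is correct with
    probability `accuracy`, independently of the others.
    """
    return 1.0 - accuracy ** n_formulas


def expected_errors(accuracy: float, n_formulas: int) -> float:
    """Expected number of erroneous formulas under the same assumption."""
    return (1.0 - accuracy) * n_formulas


if __name__ == "__main__":
    # Compare a few spreadsheet sizes at both ends of the accuracy range.
    for n in (24, 100, 1000):
        for acc in (0.96, 0.98):
            p = p_at_least_one_error(acc, n)
            e = expected_errors(acc, n)
            print(f"n={n:5d} accuracy={acc:.2f} "
                  f"P(at least one error)={p:.3f} expected errors={e:.1f}")
```

Under these assumptions, the chance of a completely error-free spreadsheet shrinks geometrically with the number of root formulas, which is why the question shifts from whether there is an error to how many there are.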

Publication

2007, EuSpRIG

Full article

Thinking is bad: Implications of human error research for spreadsheet research and practice