Authors

Salvatore Aurigemma & Raymond R. Panko

Abstract

Previous spreadsheet inspection experiments have had human subjects look for seeded errors in spreadsheets.

In this study, subjects attempted to find errors in human-developed spreadsheets, which avoids the potential artifacts created by error seeding. Human subjects' success rates were compared to the success rates for error flagging by spreadsheet static analysis tools (SSATs) applied to the same spreadsheets.

The human error detection results were comparable to those of studies using error seeding. However, Excel Error Check and Spreadsheet Professional were almost useless for correctly flagging natural (human) errors in this study.

Sample

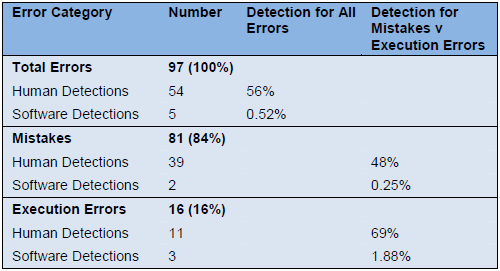

This table gives the results of the two studies. The differences are stark.

Unaided error inspection detected 54 of the 97 errors. This 56% success rate is similar to detection rates in studies of inspection with seeded errors.
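As a quick check of the arithmetic, the success rate is the number of errors detected divided by the number of errors present. The small Python sketch below reproduces the 56% figure from the reported counts. The function name `detection_rate` is illustrative only and does not come from the study.

```python
def detection_rate(detected: int, total: int) -> float:
    """Fraction of errors found (hypothetical helper, not from the study)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return detected / total


# Counts reported for unaided human inspection.
rate = detection_rate(54, 97)
print(f"{rate:.1%}")  # 55.7%, i.e. roughly 56% when rounded to a whole percent
```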

In contrast, software-aided detection performed dismally. Only five errors were recorded as usefully flagged, and all five came from a single subject.

Publication

2010, EuSpRIG

Full article

The detection of human spreadsheet errors by humans versus inspection (auditing) software