Authors

Marc Fisher, Gregg Rothermel, Darren Brown, Mingming Cao, Curtis Cook, & Margaret Burnett

Abstract

Spreadsheet languages, which include commercial spreadsheets and various research systems, have had a substantial impact on end-user computing. Research shows, however, that spreadsheets often contain faults.

Thus, in previous work we presented a methodology that helps spreadsheet users test their spreadsheet formulas. Our empirical studies have shown that end users can use this methodology to test spreadsheets more adequately and efficiently; however, the process of generating test cases can still present a significant impediment.

To address this problem, we have been investigating how to incorporate automated test case generation into our testing methodology in ways that support incremental testing and provide immediate visual feedback. We have used two techniques for generating test cases, one involving random selection and one involving a goal-oriented approach.

We describe these techniques and their integration into our testing environment, and report results of an experiment examining their effectiveness and efficiency.

Sample

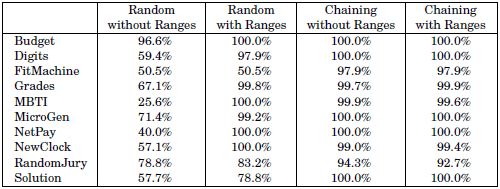

Ultimate effectiveness is the percentage of feasible du-associations in a spreadsheet that a test generation method can exercise.

The table lists, for each subject spreadsheet, the ultimate effectiveness of Random and Chaining, with and without range information, averaged across 35 runs.

Chaining without range information achieved over 99% average ultimate effectiveness on all but two of the spreadsheets (FitMachine and RandomJury). On these two spreadsheets, the technique achieved average ultimate effectiveness over 97% and 94%, respectively.
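The ultimate effectiveness measure defined above is a simple ratio, and a minimal sketch may make the arithmetic concrete. The function name, the representation of a du-association as a (defining cell, using cell) pair, and the sample data are all assumptions made for illustration; they are not taken from the article or from the WYSIWYT implementation.

```python
def ultimate_effectiveness(exercised, feasible):
    """Return the percentage of feasible du-associations that were exercised.

    Both arguments are collections of hashable du-association identifiers
    (a hypothetical representation chosen for this sketch).
    """
    feasible = set(feasible)
    if not feasible:
        raise ValueError("no feasible du-associations to measure against")
    # Only feasible du-associations count toward the measure.
    covered = feasible & set(exercised)
    return 100.0 * len(covered) / len(feasible)


# Hypothetical du-associations, written as (defining cell, using cell) pairs.
feasible = {("A1", "B1"), ("A1", "B2"), ("A2", "B2"), ("B1", "C1")}
exercised = {("A1", "B1"), ("A2", "B2"), ("B1", "C1")}

print(ultimate_effectiveness(exercised, feasible))  # 75.0
```

Here three of the four feasible du-associations are exercised, so the measure is 75%. The figures reported in the table would correspond to averaging such a value over the 35 runs of each technique.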

Publication

ACM Transactions on Software Engineering and Methodology, Volume 15, Number 2, April 2006, pages 150-194

Full article

Integrating automated test generation into the WYSIWYT spreadsheet testing methodology