Authors

Vijay B. Krishna, Curtis Cook, Daniel Keller, Joshua Cantrell, Chris Wallace, & Margaret Burnett

Abstract

Spreadsheets are among the most common forms of software in use today. Unlike more traditional forms of software, however, spreadsheets are created and maintained by end users with little or no programming experience. As a result, a high percentage of these "programs" contain errors. Unfortunately, software engineering research has for the most part ignored this problem.

We have developed a methodology designed to aid end users in developing, testing, and maintaining spreadsheets. The methodology communicates testing information and information about the impact of cell changes to users in a manner that does not require an understanding of formal testing theory or the behind-the-scenes mechanisms.

This paper presents the results of an empirical study that shows that, during maintenance, end users using our methodology were more accurate in making changes and did a significantly better job of validating their spreadsheets than end users without the methodology.

Sample

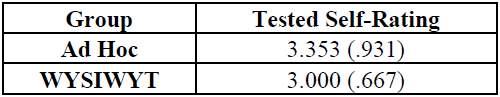

The post-session questionnaires asked subjects to rate "on a familiar A-F scale" how well they tested their spreadsheet.

Although 12 of the 17 Ad Hoc subjects did not execute a single test and 17 of the 19 WYSIWYT subjects executed at least one test case, the Ad Hoc subjects' average testing self-rating was considerably higher than the WYSIWYT subjects' average self-rating.

Publication

2001, IEEE International Conference on Software Maintenance, November, pages 72-81

Full article

Incorporating incremental validation and impact analysis into spreadsheet maintenance