Authors

Felienne Hermans

Abstract

Current testing practices for spreadsheets are ad hoc in nature: spreadsheet users put 'test formulas' in their spreadsheets to validate outcomes.

In this paper we show that this practice is common by analyzing a large set of spreadsheets used in practice to determine whether spreadsheet users currently test. In a follow-up analysis, we study the test practices found in this set to understand in depth how spreadsheet users test in the absence of formal testing methods.

Subsequently, we describe the Expector approach, which extracts the test formulas already present in a spreadsheet, presents them to the user, and suggests improvements, both to individual test formulas and to the spreadsheet as a whole, the latter by increasing the coverage of the test formulas.

Finally, we offer support to understand why a test formula is breaking. We end the paper with an example underlining the applicability of our approach.

Sample

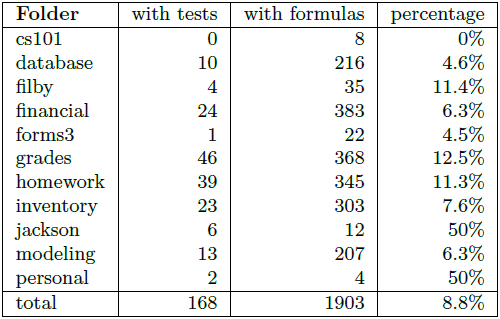

Of the 1,903 spreadsheets in the EUSES corpus that contain formulae, only 8.8% use test formulae. Of the 168 spreadsheets with test formulae, 80% had three or fewer unique test formulae.
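
To make the notion of a test formula concrete, the sketch below flags formulas of the shape `=IF(condition, ..., ...)` in which the condition is a comparison and at least one branch is a string literal such as "OK" or "ERROR". This heuristic is an assumption made for illustration and is not necessarily the detection rule used in the paper. The function names are hypothetical, and the code assumes comma-separated arguments.

```python
def split_args(body):
    """Split a formula argument list on top-level commas."""
    args, current = [], []
    depth, in_string = 0, False
    for ch in body:
        if ch == '"':
            in_string = not in_string
        if not in_string:
            if ch == '(':
                depth += 1
            elif ch == ')':
                depth -= 1
            elif ch == ',' and depth == 0:
                args.append(''.join(current).strip())
                current = []
                continue
        current.append(ch)
    args.append(''.join(current).strip())
    return args


def is_string_literal(arg):
    """True for arguments that start and end with a double quote."""
    return len(arg) >= 2 and arg.startswith('"') and arg.endswith('"')


def looks_like_test_formula(formula):
    """Assumed heuristic: IF with a comparison and a string-valued branch."""
    text = formula.strip()
    if not text.upper().startswith('=IF(') or not text.endswith(')'):
        return False
    args = split_args(text[4:-1])
    if len(args) != 3:
        return False
    condition, when_true, when_false = args
    has_comparison = any(op in condition for op in ('=', '<', '>'))
    return has_comparison and (
        is_string_literal(when_true) or is_string_literal(when_false)
    )


def share_with_tests(spreadsheets):
    """Fraction of spreadsheets (lists of formulas) with a test formula."""
    with_tests = sum(
        1 for formulas in spreadsheets
        if any(looks_like_test_formula(f) for f in formulas)
    )
    return with_tests / len(spreadsheets)
```

For example, `looks_like_test_formula('=IF(SUM(B2:B10)=B11,"OK","ERROR")')` is true, while `=SUM(B2:B10)` and `=IF(A1>0,A1*2,0)` are not flagged. The reported share is consistent with the counts given above: 168 of 1,903 spreadsheets is about 8.8%.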

Publication

CASCON 2013, November 2013